Problem

I just upgraded to OS X Yosemite. It looks pretty cool and works fine. In addition to Java, CSS and JavaScript, we write a lot of PHP while developing MageFlow, our data management solution for Magento.

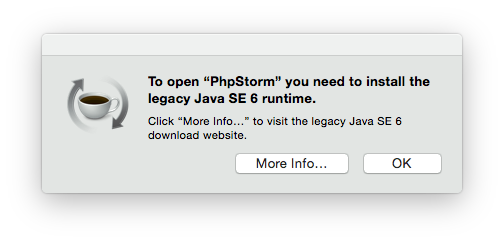

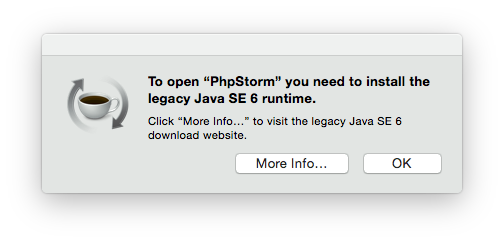

I noticed a problem when I tried to start my everyday IDE PhpStorm:

Stop! I just upgraded to a fresh, new OS X and I’m forced to install an almost 10-year-old Java? Nope…

I have Java 8 installed on my Mac and I thought it would be cool to run PhpStorm on top of that one.

So I looked around and the solution is surprisingly simple.

Solution 1 (deprecated – see the update)

Open the file /Applications/PhpStorm.app/Contents/Info.plist with your favorite editor (mine is vim).

Find the following tag:

<key>JVMVersion</key>

Below that one there should be

<string>1.6*</string>

or similar.

Replace that line with

<string>1.8*</string>

Start PhpStorm.

😉

Important update

It’s important to know that changing the Info.plist file breaks the application’s digital signature. This has consequences, such as the app asking for firewall permissions on each start and patches not being applied properly. See more info on the JetBrains Support page.

In short, you need to add the wanted Java version to a preference file instead of hacking the application’s Info.plist.

In my case I created the file ~/Library/Preferences/WebStorm9/idea.properties with the contents:

JVMVersion=1.8*

This applies to WebStorm, but it’s done the same way for all JetBrains IDEs. Just change the app name in the path.

For PhpStorm put the file at:

~/Library/Preferences/WebIde80/idea.properties
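If you prefer the terminal, the same file can be created with a few shell commands. This is only a minimal sketch, assuming the PhpStorm path above; the PREFS_DIR and PROPS_FILE variable names are mine, not something the IDE reads. It appends the setting only when no JVMVersion line is present, so any other properties already in the file stay untouched.

```shell
# Assumption: PhpStorm reads its preferences from ~/Library/Preferences/WebIde80,
# as described above. Override PREFS_DIR for another JetBrains IDE.
PREFS_DIR="${PREFS_DIR:-$HOME/Library/Preferences/WebIde80}"
PROPS_FILE="$PREFS_DIR/idea.properties"

# Create the preferences directory if it does not exist yet.
mkdir -p "$PREFS_DIR"

# Append the setting only if the file has no JVMVersion line already.
if ! grep -q '^JVMVersion=' "$PROPS_FILE" 2>/dev/null; then
  printf 'JVMVersion=1.8*\n' >> "$PROPS_FILE"
fi

# Show the resulting file for a quick check.
cat "$PROPS_FILE"
```

For WebStorm, run it with PREFS_DIR set to ~/Library/Preferences/WebStorm9 instead.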